Car Accessory Design - HoloLens

The Car Customization App on HoloLens gives users an immersive way to customize and visualize car accessories in real time using augmented reality. Built for Microsoft HoloLens, the app lets users interact with a holographic representation of a car, select parts such as wheels, spoilers, and floor mats, and customize them with gestures or voice commands. The success of this project helped secure a partnership with Microsoft by showing that the app could effectively integrate HoloLens’ spatial and interaction technologies.

Roles:

Interaction Designer: Responsible for designing the user interactions with both gestures and voice commands.

Developer: Involved in coding the app, integrating spatial understanding and ensuring real-time rendering of 3D objects.

Tools:

Microsoft HoloLens SDK: For building mixed reality experiences.

Unity 3D: Used for 3D modeling, rendering, and interaction design.

Visual Studio: For developing the backend code and debugging.

Figma/Sketch: For designing UI mockups and flows.

Azure Spatial Anchors: To enhance spatial understanding and persistence of holographic objects in real-world environments.

Project Duration:

6 months

In designing the Car Customization App on HoloLens, we identified several critical areas that influenced both the design and functionality of the app. The following mind map outlines key factors, including the problem definition, the target audience, user interactions, and future enhancements:

This visual framework helped guide the project, ensuring that we addressed both user and technical requirements while also planning for future improvements.

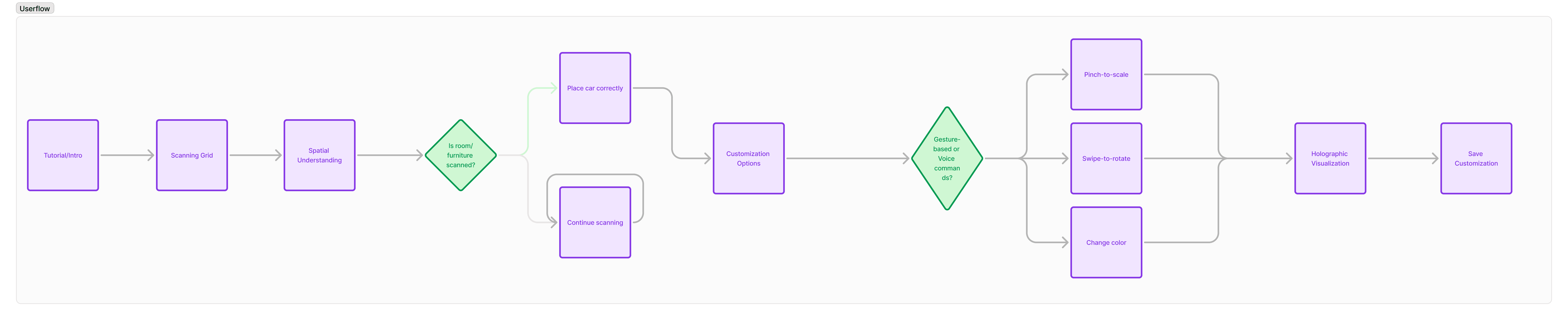

User Flow Overview

The app starts with a tutorial that introduces the core interactions: gestures such as air taps, and voice commands, both used to modify the car’s accessories. Once users are comfortable with the controls, the app scans their physical environment using HoloLens' spatial understanding capabilities. After the room is mapped, users can select and customize accessories, applying them to the holographic car in real time.

User Flow:

Tutorial/Intro: The user is welcomed with an interactive tutorial explaining how to interact with the app using hand gestures or voice commands.

Scanning Grid & Spatial Understanding: Once the tutorial is complete, the app begins scanning the user’s room to understand the available space for placing the car. This ensures that the holographic car is correctly scaled and placed within the environment.

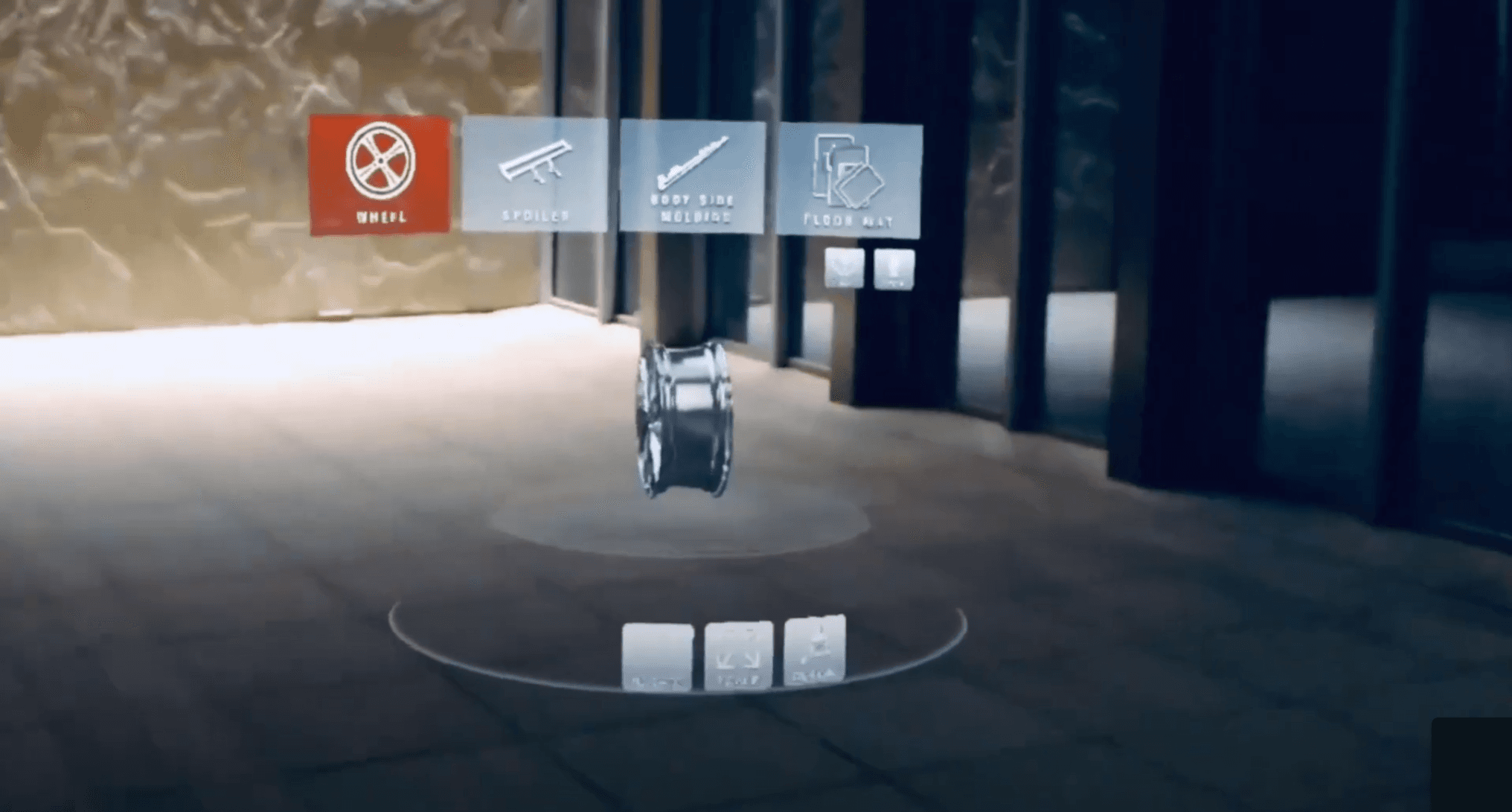

Customization Options: Users then enter the customization mode, where they can choose from a variety of accessories, such as wheels, spoilers, and floor mats, to apply to their car.

Gesture or Voice Command Decision: Users can modify the selected accessory either with gestures (pinch-to-scale, swipe-to-rotate) or with voice commands such as "Change wheel color to red" or "Increase spoiler size."
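The flow above can be read as a small state machine. The sketch below is only an illustration of that idea, written in Python for brevity rather than in the Unity code the app actually used; the stage names and event labels (`tutorial_done`, `room_mapped`, and so on) are hypothetical and were chosen for this example.

```python
from enum import Enum, auto


class Stage(Enum):
    TUTORIAL = auto()
    SCANNING = auto()
    CUSTOMIZATION = auto()
    MODIFY_BY_GESTURE = auto()
    MODIFY_BY_VOICE = auto()


# Allowed transitions: (current stage, event) -> next stage.
# Event names are hypothetical labels for this sketch.
TRANSITIONS = {
    (Stage.TUTORIAL, "tutorial_done"): Stage.SCANNING,
    (Stage.SCANNING, "room_mapped"): Stage.CUSTOMIZATION,
    (Stage.CUSTOMIZATION, "gesture_started"): Stage.MODIFY_BY_GESTURE,
    (Stage.CUSTOMIZATION, "voice_command_heard"): Stage.MODIFY_BY_VOICE,
    (Stage.MODIFY_BY_GESTURE, "change_applied"): Stage.CUSTOMIZATION,
    (Stage.MODIFY_BY_VOICE, "change_applied"): Stage.CUSTOMIZATION,
}


def next_stage(current: Stage, event: str) -> Stage:
    """Return the next stage, or stay in the current one if the event does not apply."""
    return TRANSITIONS.get((current, event), current)
```

Keeping the transitions in one table makes it easy to check that a user cannot reach customization before the room has been mapped.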

Spatial Understanding Process

The spatial understanding process begins with the app scanning the user’s environment to accurately place the holographic car. If the room or furniture has been adequately scanned, the app places the car in the correct position; otherwise, it continues to scan for further detail. This process ensures that the car fits naturally into the user’s space.
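The scan-or-place decision can be sketched as a single check. This is a simplified illustration in Python, not the app's actual logic: the `ScanResult` fields, the car dimensions, and the 80% coverage threshold are all assumptions made for the example, as is the choice to shrink the car when the free floor area is smaller than its footprint.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScanResult:
    free_length_m: float  # longest clear floor run found so far
    free_width_m: float   # clear floor width perpendicular to that run
    coverage: float       # fraction of the room scanned, 0.0 to 1.0


# Hypothetical values for this sketch, not taken from the project.
CAR_LENGTH_M = 4.5
CAR_WIDTH_M = 1.8
MIN_COVERAGE = 0.8


def placement_scale(scan: ScanResult) -> Optional[float]:
    """Return the car's display scale, or None to signal 'keep scanning'."""
    if scan.coverage < MIN_COVERAGE:
        return None  # room not scanned well enough yet
    if scan.free_length_m <= 0 or scan.free_width_m <= 0:
        return None  # no usable floor found yet
    # Largest scale at which the footprint still fits, capped at life size.
    fit = min(scan.free_length_m / CAR_LENGTH_M, scan.free_width_m / CAR_WIDTH_M)
    return min(1.0, fit)
```

A caller would keep feeding updated scan results into `placement_scale` and place the car the first time it returns a number instead of `None`.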

Customization Interaction

Gesture-Based Customization

Once accessories are selected, users can interact with them using hand gestures such as pinch-to-scale and swipe-to-rotate. For example, users can pinch to adjust the size of the wheels or swipe to rotate the car and see how the spoiler looks from different angles. This allows for highly interactive, real-time customization.
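One common way to map these two gestures onto an object is shown below as a rough Python sketch. It assumes the hand-tracking layer already reports the distance between the pinching fingers and the horizontal travel of a swipe in meters; the function names, the scale limits, and the `degrees_per_meter` sensitivity are hypothetical values, not figures from the project.

```python
def pinch_scale(start_scale: float, start_distance_m: float, current_distance_m: float,
                min_scale: float = 0.5, max_scale: float = 2.0) -> float:
    """Scale an accessory in proportion to how far the pinch has opened or closed."""
    if start_distance_m <= 0:
        raise ValueError("start_distance_m must be positive")
    raw = start_scale * (current_distance_m / start_distance_m)
    # Clamp so an accessory cannot shrink or grow beyond the allowed range.
    return max(min_scale, min(max_scale, raw))


def swipe_yaw(start_yaw_deg: float, swipe_dx_m: float,
              degrees_per_meter: float = 180.0) -> float:
    """Rotate around the vertical axis in proportion to horizontal swipe distance."""
    return (start_yaw_deg + swipe_dx_m * degrees_per_meter) % 360.0
```

Clamping the scale keeps an accidental wide pinch from producing an unusable accessory size, and wrapping the yaw keeps the angle in the 0 to 360 degree range.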

Voice Command-Based Customization

Users can also use voice commands to modify accessories. Commands like “Change wheel color to red” or “Rotate spoiler” allow for hands-free interaction, which keeps the experience seamless in scenarios where gesture-based input is not ideal.
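Once speech has been turned into text, commands like these can be mapped to actions with a few patterns. The following Python sketch is an assumption about how such a mapping could look, not the app's implementation; the action names (`set_color`, `resize`, `rotate`) and the grammar are hypothetical and cover only the example phrases mentioned in this write-up.

```python
import re
from typing import Optional

# Each pattern captures the accessory ("part") and, where relevant, a value.
# Parts may be one or two words, e.g. "wheel" or "floor mats".
PATTERNS = [
    (re.compile(r"^change (?P<part>\w+(?: \w+)?) color to (?P<value>\w+)$"), "set_color"),
    (re.compile(r"^(?P<value>increase|decrease) (?P<part>\w+(?: \w+)?) size$"), "resize"),
    (re.compile(r"^rotate (?P<part>\w+(?: \w+)?)$"), "rotate"),
]


def parse_command(utterance: str) -> Optional[dict]:
    """Turn recognized text into an action dictionary, or None if nothing matches."""
    text = utterance.strip().lower().rstrip(".!")
    for pattern, action in PATTERNS:
        match = pattern.match(text)
        if match:
            return {"action": action, **match.groupdict()}
    return None
```

Returning `None` for unrecognized phrases gives the app a clear point at which to prompt the user to repeat or rephrase the command.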

Holographic Visualization

Once customizations are applied, the app renders a real-time holographic view of the car. Users see their modifications at life size in 3D, which supports decision-making by showing how the car would look with the chosen accessories. They can rotate the car and zoom in for detailed inspection.

Learnings and Takeaways

Seamless Interaction Design: Balancing gesture-based and voice command interactions is key in mixed reality applications. While gestures offer precision, voice commands provide ease and accessibility, especially in situations where gestures may be limited. Understanding when to use each was crucial in delivering a smooth user experience.

Spatial Understanding as a Foundation: The accurate placement of holographic objects in the user’s physical space is fundamental to the success of augmented reality experiences. By investing time in optimizing the spatial understanding algorithms, we ensured that the car models would fit naturally into any environment, enhancing the immersive feel.

Collaborating with Industry Leaders: This project underscored the importance of industry collaboration. Working closely with Microsoft's Mixed Reality team allowed us to leverage the latest HoloLens technology and ensure our app was optimized for performance and scalability.

Usability and User-Centered Design: Frequent usability testing provided valuable insights into user behaviors and preferences. By testing with both novice and advanced users, we discovered key areas where interactions could be simplified, particularly in the voice-command feature set.

Future Potential of Mixed Reality: The project pointed to several future enhancements, such as AI-driven customization suggestions and collaborative design sessions. It lays a foundation for exploring more advanced user engagement strategies in augmented reality.