Engine Disassembly – HoloLens

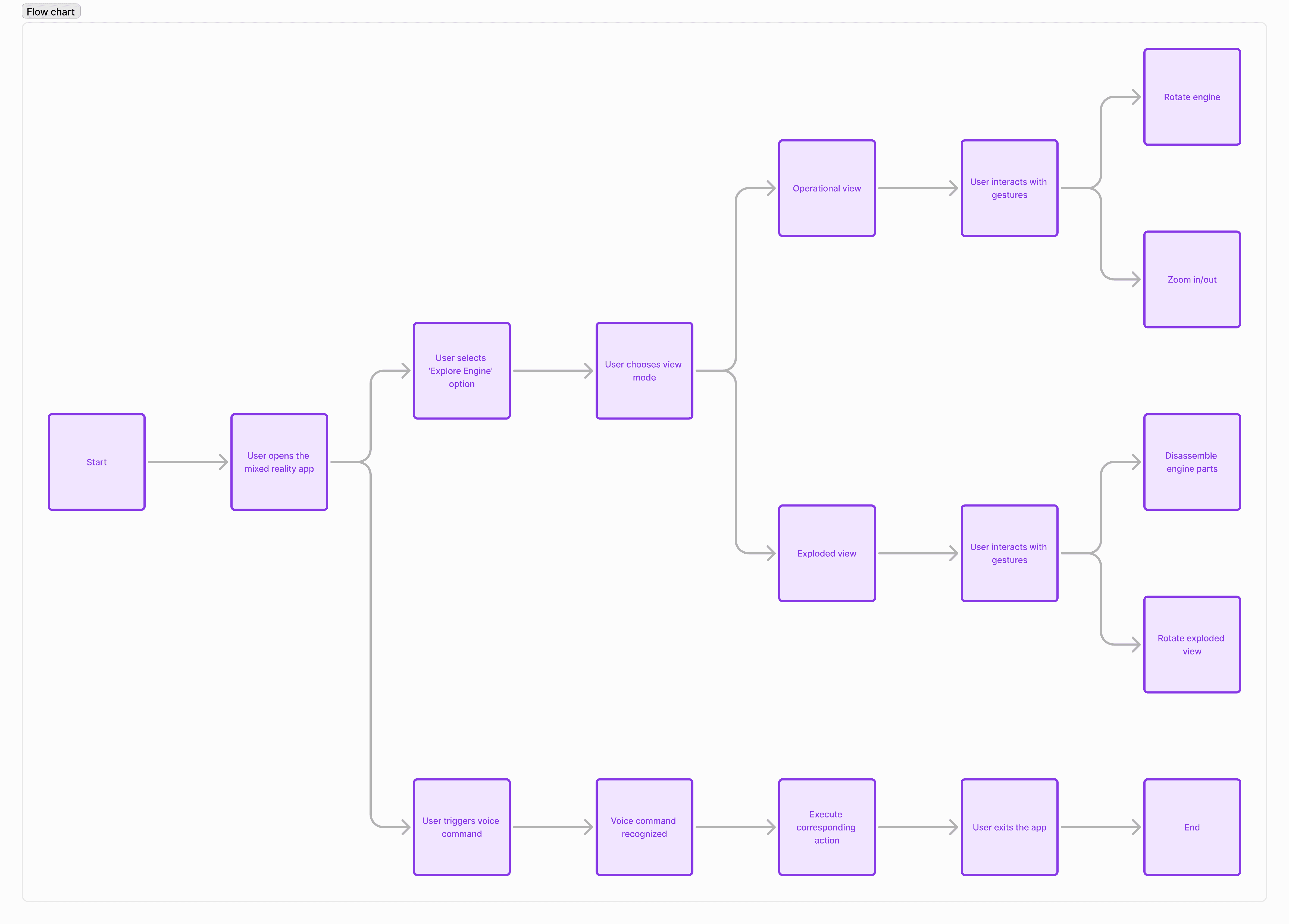

The Engine Disassembly app gives users an immersive way to explore the components of an engine. Running on HoloLens, it provides both an operational view and an exploded view of the engine and lets users interact with each part in real time. The goal was to make complex mechanical learning more engaging and accessible through hands-on, gesture-based and voice-command interactions.

Objective

The app's primary objective was to help users, from students of engine mechanics to professionals, better understand an engine's internal components and how they function. Through mixed reality, the project aimed to turn the traditionally static way of learning this material into a dynamic, interactive experience.

Tools

Unity

HoloLens

MRTK

AutoCAD

Photoshop

Approach

Vision

My vision for this project was to redefine how people learn about mechanical systems by creating an immersive, interactive platform that enhances engagement. Using mixed reality, I wanted users to be able to explore an engine from every angle and get a detailed look at each component and its function within the larger system.

Challenges

One of the main challenges was ensuring that the 3D models of the engine were both accurate and optimized for the HoloLens platform. Another was integrating gesture and voice-based interactions smoothly enough to feel natural. Both the gesture recognition and the voice recognition required precise tuning to be accurate and responsive.

Design Process

Gesture and Voice Commands

Designing interactions that felt intuitive was key. The app supported gesture controls for actions such as rotating engine parts and zooming in on them. Voice commands let users highlight specific components or request detailed information about them. For instance, a user could say “Show pistons” to zoom in on the pistons and learn about that part specifically.

To create these interactions, I mapped each gesture to a specific action, making sure the mappings felt natural and matched common mixed reality (MR) interaction models. Voice recognition was integrated into Unity to interpret spoken commands, which made the app more accessible.
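To illustrate the command-to-action mapping described above, here is a minimal sketch of how a spoken phrase such as “Show pistons” could be turned into an action and a target part. The app itself was built in Unity; this Python version only illustrates the logic and is not the project's code. The verb table, the part names, and the function names are all hypothetical.

```python
import string
from typing import Optional, Tuple

# Hypothetical part names; the app's real component list is not given here.
KNOWN_PARTS = {"pistons", "crankshaft", "camshaft"}

# Hypothetical mapping from a spoken verb to an internal action name.
VERB_TO_ACTION = {"show": "focus", "highlight": "highlight", "describe": "info"}


def parse_command(phrase: str) -> Optional[Tuple[str, str]]:
    """Return (action, part) for a two-word phrase like 'Show pistons'.

    Returns None when the phrase is not a known verb followed by a known part.
    """
    # Lowercase, split on whitespace, and strip punctuation from each word.
    words = [w.strip(string.punctuation) for w in phrase.lower().split()]
    words = [w for w in words if w]
    if len(words) != 2:
        return None
    verb, part = words
    if verb in VERB_TO_ACTION and part in KNOWN_PARTS:
        return VERB_TO_ACTION[verb], part
    return None
```

Keeping the phrase table separate from the handlers makes it easy to add or rename commands after user testing without touching the recognition code.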

User Interface Design

The UI was designed to guide users through the interaction process while minimizing the need for extensive instruction. It provided clear visual cues and feedback, ensuring that users always understood how to proceed with their exploration. The interface was kept minimal, allowing the MR experience to take center stage.

User-Centric Design

Throughout the development process, the focus remained on user needs. Whether they were students trying to understand the basics of engine mechanics or professionals seeking a more in-depth understanding, the app was designed to make complex systems more approachable.

Project Highlights

Immersive Learning

The Engine Disassembly app allowed users to interactively engage with the engine’s inner workings, offering both visual and auditory feedback that enriched the learning experience. By using a combination of exploded views and real-time part manipulation, users could better understand how each part contributes to the overall function of the engine.
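The write-up does not say how the exploded view was computed. One common approach, shown here purely as an assumption, is to push each part outward along the line from the engine's center to the part's assembled position and to blend between the two states with a parameter `t`. The function name, the `spread` factor, and the use of Python instead of Unity are all illustrative choices.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def exploded_position(assembled: Vec3, center: Vec3, spread: float, t: float) -> Vec3:
    """Move a part away from the engine center.

    t = 0 returns the assembled position; t = 1 returns the fully exploded
    position, where the part sits `spread` times farther from the center.
    Values of t outside [0, 1] are clamped.
    """
    t = max(0.0, min(1.0, t))
    # Distance multiplier: 1.0 when assembled, `spread` when fully exploded.
    scale = 1.0 + (spread - 1.0) * t
    return tuple(c + (a - c) * scale for a, c in zip(assembled, center))
```

Animating `t` from 0 to 1 over a short interval gives a smooth transition between the operational and exploded views, and every part keeps its direction relative to the center, so the spatial relationships stay readable.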

Seamless Interactions

With the integration of gesture and voice commands, users could seamlessly interact with the app. The ability to rotate, zoom, and select parts without needing a controller made the experience more immersive and engaging. This allowed users to focus on learning without being hindered by complicated controls.

User Testing & Feedback

Several user testing sessions were conducted throughout development to ensure the app was intuitive and met the needs of its audience. Based on feedback, the gesture mapping was refined to improve responsiveness, and the voice commands were optimized for better accuracy. These iterations led to a highly functional and user-friendly experience.

Results & Impact

The Engine Disassembly app demonstrated how mixed reality can be used to create interactive, engaging educational tools. Users reported a significant increase in engagement and understanding compared with traditional learning methods. The app not only improved learning outcomes but also showed the potential of MR in education and training environments.

Key Takeaways

This project taught me the importance of intuitive interactions when designing for MR environments. The gesture and voice command integration required constant iteration and testing to ensure accuracy and responsiveness. Additionally, keeping the user at the center of every design decision was crucial in delivering an app that was both functional and enjoyable to use.