VR Co-Lab – A Virtual Reality Platform for Human-Robot Disassembly Training and Synthetic Data Generation

Introduction

Title: VR Co-Lab – A Virtual Reality Platform for Human-Robot Disassembly Training and Synthetic Data Generation

Role: Developer and Researcher

Year: 2024

Project Overview

Objective:

The primary goal of VR Co-Lab was to develop a comprehensive VR training platform to enhance human-robot collaboration in industrial disassembly tasks, with a focus on e-waste recycling. The platform integrated advanced body tracking through the Quest Pro headset and generated synthetic data during training sessions to improve and evaluate robot path planning models.

Roles:

Developer: Integrated ROS with Unity, developed VR training scenarios, and ensured smooth interaction between virtual disassembly tasks and real-time robotic control.

Researcher: Conducted research on synthetic data generation and analyzed performance data to optimize robot path planning.

Tools:

Unity Game Engine

ROS (Robot Operating System)

Quest Pro Headset

Docker

Unity ROS TCP Connector

Unity URDF Importer

JASP

Approach

Vision and Innovation:

The vision for this project was to create an immersive VR training environment that enhances collaboration between humans and robots in disassembly tasks. By leveraging synthetic data generation, I aimed to improve both training efficiency and robot path planning models.

Identifying Unique Challenges:

Some of the challenges included integrating the Quest Pro headset for precise body tracking, creating realistic VR interactions, and generating synthetic data for continuous system improvement.

Resolving Complex Problems:

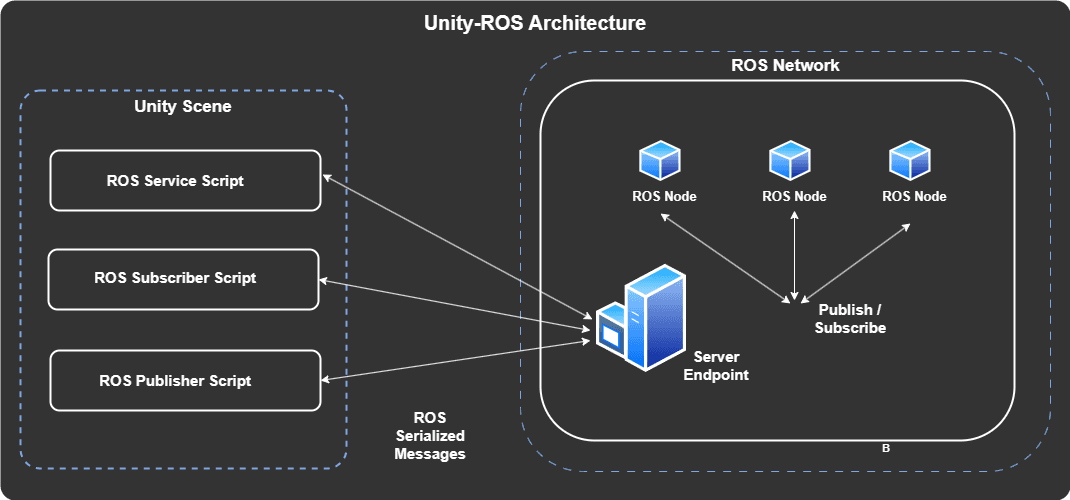

Integrating the ROS communication model with Unity was a critical task. I designed a system architecture that exchanged data in real time between the VR interface and the physical robot. Creating realistic VR training scenarios also took several design iterations before the interactions felt natural.

User-Centric Design:

The design of the VR training system was driven by user-centric principles. Focusing on the trainees' needs and providing real-time feedback allowed us to refine the experience and ensure usability. Continuous testing and feedback played a key role in the system's evolution.

Meeting User Needs:

The platform was designed to meet the needs of users in industrial disassembly training, ensuring the system was engaging, practical, and easy to navigate.

Project Details

System Architecture:

The system was structured into three primary components:

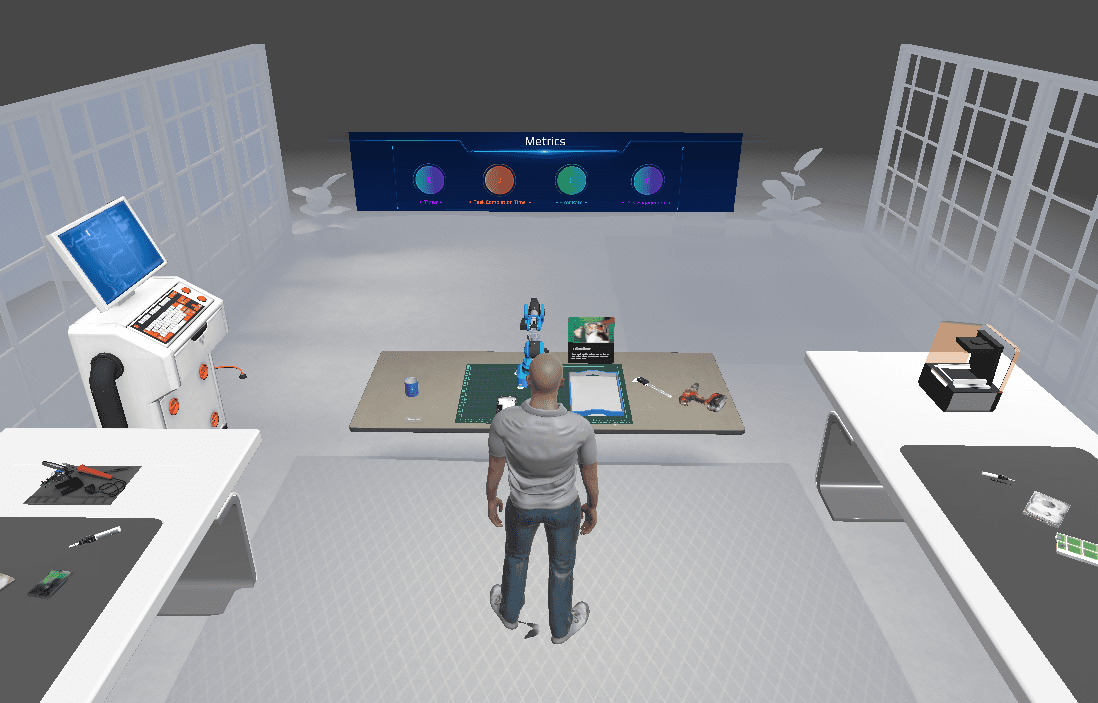

VR Environment: Developed in Unity, simulating a disassembly workstation with realistic tools and components.

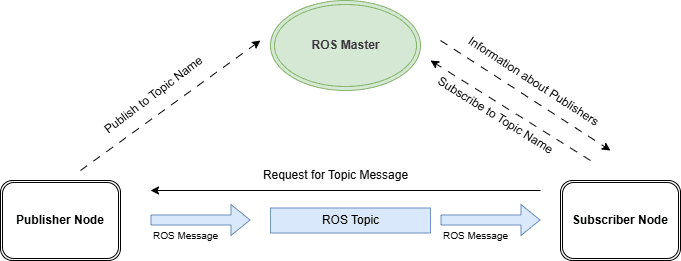

Robotic Control System: Managed by ROS, with nodes for data exchange and control.

Feedback & Data Collection: Collected performance metrics (task times, error rates) and generated synthetic data for improving robot path planning.
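The data-collection component logged task times and error rates. The source does not describe the log format, so the sketch below assumes a hypothetical per-trial record (`TrialRecord`, its field names, and the `summarize` helper are illustrative, not the project's schema). It shows one way to aggregate those two metrics, with the error rate defined here as errors per attempted disassembly step.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class TrialRecord:
    """One disassembly attempt in a VR session (hypothetical schema)."""
    participant_id: str
    task_id: str
    completion_time_s: float  # seconds from task start to task completion
    errors: int               # e.g. wrong tool or wrong removal order (assumed definition)
    steps: int                # disassembly steps attempted in this trial


def summarize(trials: List[TrialRecord]) -> Dict[str, float]:
    """Aggregate mean completion time and error rate (errors per step) across trials."""
    if not trials:
        raise ValueError("no trials to summarize")
    total_errors = sum(t.errors for t in trials)
    total_steps = sum(t.steps for t in trials)
    return {
        "mean_completion_time_s": mean(t.completion_time_s for t in trials),
        "error_rate": total_errors / total_steps if total_steps else 0.0,
    }


if __name__ == "__main__":
    # Placeholder values for illustration only, not project data.
    demo = [
        TrialRecord("p01", "task_a", 180.0, 1, 10),
        TrialRecord("p02", "task_a", 120.0, 3, 10),
    ]
    print(summarize(demo))
```

Normalizing errors by steps rather than by trials keeps the rate comparable when tasks have different numbers of disassembly steps.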

VR Environment:

The VR environment replicated a disassembly workstation, letting users interact with tools and components in real time and receive immediate feedback.

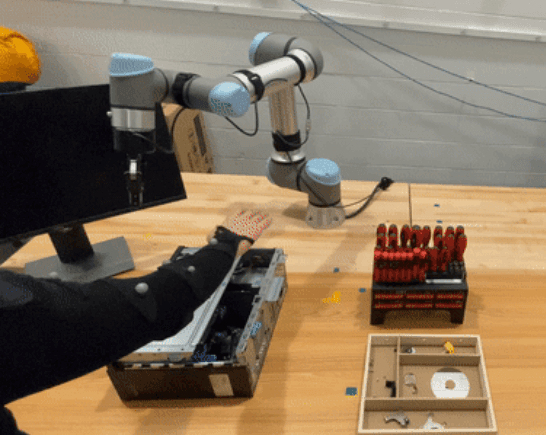

Real Environment:

The physical workstation that served as the reference for the VR environment.

Integration with ROS:

ROS handled communication between the VR environment and the physical robot. The Unity ROS TCP Connector relayed messages between Unity and ROS, and the Unity URDF Importer loaded the robot's URDF model into the Unity scene. Together, these ensured accurate simulation and control of the robot.
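One detail any Unity/ROS bridge must handle is the frame convention: Unity uses a left-handed, y-up frame (x right, y up, z forward), while ROS follows REP 103 (right-handed; x forward, y left, z up). The Unity ROS TCP Connector package provides its own conversion utilities for this, so the Python sketch below only illustrates the underlying axis mapping for positions. It is not taken from the project code, and `Vec3`, `unity_to_ros`, and `ros_to_unity` are hypothetical names.

```python
from typing import NamedTuple


class Vec3(NamedTuple):
    x: float
    y: float
    z: float


def unity_to_ros(p: Vec3) -> Vec3:
    """Map a Unity position (x right, y up, z forward) to ROS axes
    (x forward, y left, z up). Both sides are assumed to use metres."""
    return Vec3(x=p.z, y=-p.x, z=p.y)


def ros_to_unity(p: Vec3) -> Vec3:
    """Inverse of unity_to_ros."""
    return Vec3(x=-p.y, y=p.z, z=p.x)


# A point 1 m in front of the Unity origin lies on the ROS +x axis.
print(unity_to_ros(Vec3(0.0, 0.0, 1.0)))
```

Because the two frames differ in handedness, one axis must change sign; here Unity's "right" becomes ROS's negative "left".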

User Taskflow:

Tracked Body Points:

Using Meta's Movement SDK, the platform tracked a set of upper-body points on the user to predict the user's movements relative to the robot during the disassembly task.
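The source does not say which prediction model was used. The sketch below assumes the simplest option, a constant-velocity extrapolation of each tracked point, followed by a check of which point is predicted to come closest to a reference point on the robot (for example its end effector). The joint names, the prediction horizon, and the function names are hypothetical and do not correspond to Movement SDK identifiers.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float, float]


def extrapolate(prev: Point, curr: Point, dt_sample: float, horizon: float) -> Point:
    """Constant-velocity prediction of a joint's position `horizon` seconds after
    the latest sample, given two samples taken `dt_sample` seconds apart."""
    if dt_sample <= 0:
        raise ValueError("dt_sample must be positive")
    scale = horizon / dt_sample
    return tuple(c + (c - p) * scale for p, c in zip(prev, curr))


def closest_predicted_joint(prev_frame: Dict[str, Point], curr_frame: Dict[str, Point],
                            robot_point: Point, dt_sample: float,
                            horizon: float) -> Tuple[str, float]:
    """Return the joint predicted to come closest to `robot_point`, and that distance."""
    best_joint, best_dist = "", math.inf
    for joint, curr in curr_frame.items():
        predicted = extrapolate(prev_frame[joint], curr, dt_sample, horizon)
        d = math.dist(predicted, robot_point)
        if d < best_dist:
            best_joint, best_dist = joint, d
    return best_joint, best_dist


# Hypothetical frames: the right hand moves toward the robot at 1 m/s.
prev = {"right_hand": (0.0, 0.0, 0.0), "head": (0.0, 0.5, 0.0)}
curr = {"right_hand": (0.1, 0.0, 0.0), "head": (0.0, 0.5, 0.0)}
print(closest_predicted_joint(prev, curr, (0.5, 0.0, 0.0), dt_sample=0.1, horizon=0.2))
```

A planner could compare the returned distance against a safety margin before continuing a motion; the margin value would be project-specific and is not given in the source.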

Performance Monitoring & Synthetic Data Generation:

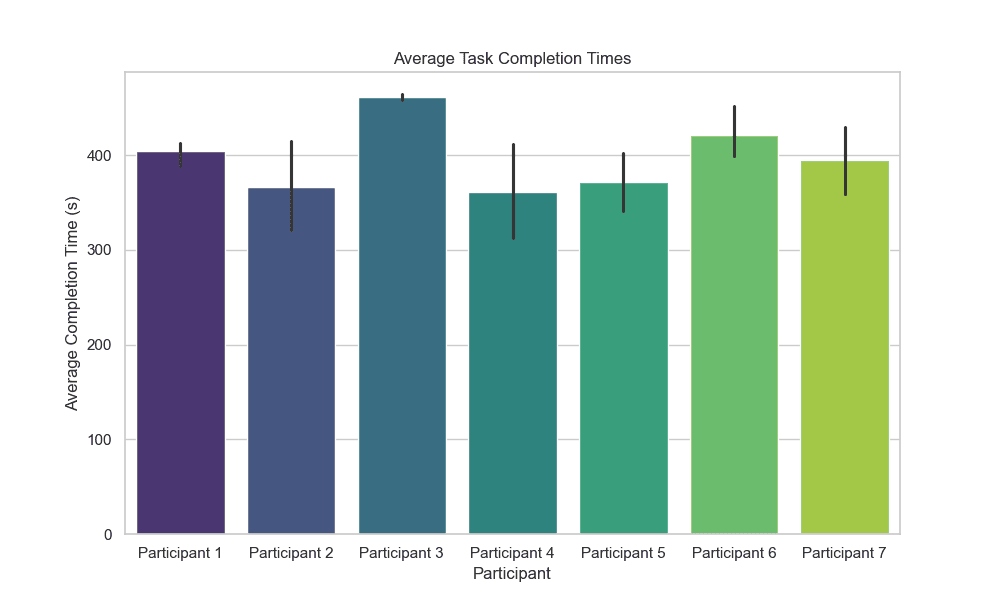

Performance metrics such as task completion times, error rates, and engagement levels were collected. This data was then used to generate synthetic datasets for improving and evaluating robot path planning models, so the models could be refined continuously as new sessions were recorded.
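The source does not specify how the synthetic datasets were produced. One common technique is noise augmentation of recorded motion, sketched below under that assumption: each variant of a recorded hand trajectory receives one random rigid offset (simulating a user standing slightly elsewhere) plus small per-sample jitter. The function name, parameters, and noise levels are hypothetical, not the project's pipeline.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float, float]
Trajectory = List[Point]


def augment(traj: Trajectory, n_variants: int, jitter_sigma: float,
            offset_sigma: float, seed: int = 0) -> List[Trajectory]:
    """Create synthetic variants of a recorded trajectory (metres).

    Each variant gets one Gaussian rigid offset (std `offset_sigma`) applied to
    every sample, plus independent Gaussian jitter (std `jitter_sigma`) per coordinate.
    A fixed seed makes the generated dataset reproducible."""
    rng = random.Random(seed)
    variants: List[Trajectory] = []
    for _ in range(n_variants):
        offset = [rng.gauss(0.0, offset_sigma) for _ in range(3)]
        variant = [
            tuple(c + o + rng.gauss(0.0, jitter_sigma) for c, o in zip(point, offset))
            for point in traj
        ]
        variants.append(variant)
    return variants


recorded = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.05, 0.0)]
synthetic = augment(recorded, n_variants=5, jitter_sigma=0.005, offset_sigma=0.02, seed=42)
print(len(synthetic), len(synthetic[0]))
```

Seeding a private `random.Random` instance, rather than the global generator, keeps each generated dataset reproducible without affecting other code that uses `random`.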

Results

The VR Co-Lab platform demonstrated the potential of VR training in enhancing human-robot collaboration. The project highlighted the importance of immersive environments and synthetic data in improving both training efficiency and robot performance.

Future Enhancements:

Future improvements could include integrating eye tracking, expanding the range of disassembly tasks, and enabling multi-user training scenarios for collaborative learning.

Learnings and Takeaways

Integration of VR and Robotic Systems: Seamless integration between virtual environments and physical robots was key to the project’s success. By ensuring smooth communication between Unity and ROS, we created a system that effectively linked user actions in VR with real-world robot control.

Synthetic Data as a Key Innovation: The use of synthetic data to improve robot path planning was a major learning point. It showcased how user interactions in VR can continuously refine and enhance the real-world performance of robots, making future training sessions even more effective.

User-Centered Design: Designing for usability was paramount. The body tracking and gesture-based controls needed to be intuitive and responsive, ensuring that users could engage with the system naturally. This focus on user-centered design led to more engaging and effective training sessions.

Future Opportunities in VR Training: This project opened new possibilities for VR-based industrial training, particularly in disassembly tasks. Future enhancements could include multi-user training scenarios, AI-based assistance, and more complex disassembly tasks integrated into the platform.